If you’re wondering what AI upscaling is and how it works, this guide is for you.

In this article, I’ll take you through everything you need to know about AI upscaling and the tech behind it.

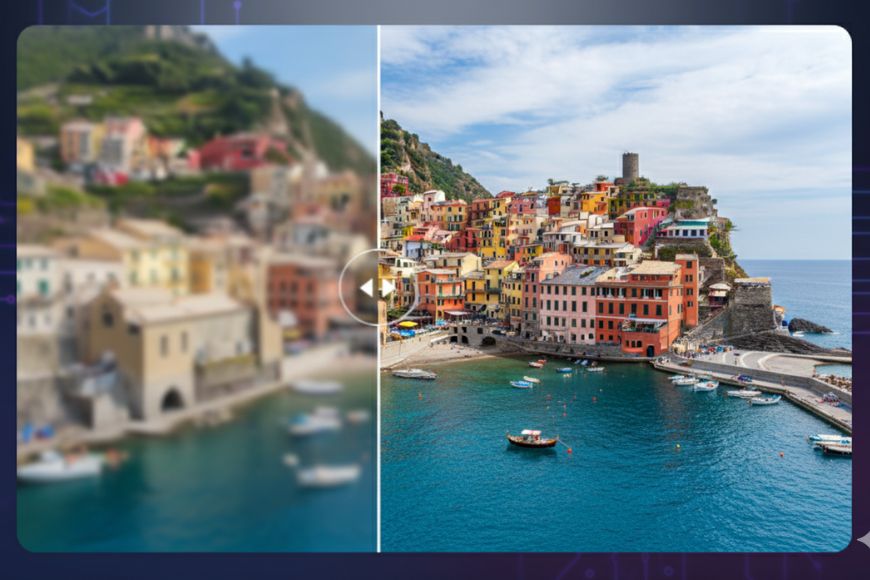

Remember the photos from your old digicam or early smartphone? Back then, they looked fine, but on today’s high-resolution screens, they look pixelated and soft.

You might have tried enlarging them in Photoshop, only to watch them turn blurry or blocky.

Ever wondered if there’s a way to turn them into high-resolution, sharp photos that would look great on modern-day screens?

That is where AI upscaling steps in. Instead of just stretching pixels, it analyzes the photo and generates plausible detail that the low-resolution version never actually captured. The result is a file that looks much closer to a native high-resolution image.

AI upscaling has gone from being a niche feature to something that photographers, designers and even casual users are starting to rely on.

Whether it’s restoring old family photos, making a large print from a cropped shot, or cleaning up video footage for modern displays, the technology is quickly becoming part of everyday workflows.

Below, I’ll break down how AI upscaling really works, the types of AI models behind it, its real-world applications, and where the tech is headed in the future.

Side note: We’ve also reviewed the best AI upscaling tools in a separate post, so check that out if you want to compare the pros, cons and pricing of different apps.

Now, let’s get started.

What Is AI Upscaling?

AI upscaling is the process of enlarging an image or video using artificial intelligence.

The goal is to make the enlarged version look sharper, clearer, and more natural than what traditional resizing methods could achieve.

Older methods like bicubic or bilinear resizing work by stretching existing pixels and filling the gaps with mathematical averages. The result is an image that is technically larger but usually soft, pixelated, or full of artifacts when pushed too far.

AI approaches this differently. Instead of stretching pixels, it analyzes the content of the image and predicts what the missing detail should look like.

Think of it like completing a puzzle: traditional upscaling just enlarges the existing pieces, making the gaps more noticeable, while AI draws on its experience of thousands of similar puzzles to create new, matching pieces that fit convincingly.

The outcome is an image that has more pixels and more “believable” detail. Grass looks like grass, skin looks like skin, and text stays legible instead of breaking down into blocks.

Let’s look more closely at the differences between AI and traditional image upscaling.

AI Upscaling vs Traditional Upscaling

Traditional upscaling methods rely on mathematical interpolation. The most straightforward of those methods is nearest-neighbor resizing, which works by copying the value of the closest pixel.

Bilinear interpolation goes a step further by taking a distance-weighted average of the four closest pixels (a 2×2 neighborhood), which creates smoother transitions but also leads to visible blurring.
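To make the difference between these two methods concrete, here is a minimal pure-Python sketch of nearest-neighbor and bilinear upscaling for a grayscale image stored as a list of rows. The function names are hypothetical, the sketch assumes an integer scale factor, and it uses one common convention for mapping pixel centers; real libraries differ in how they handle edges and coordinates.

```python
def upscale_nearest(img, scale):
    """Nearest-neighbor: every output pixel copies the closest input pixel."""
    h, w = len(img), len(img[0])
    return [[img[y // scale][x // scale] for x in range(w * scale)]
            for y in range(h * scale)]


def upscale_bilinear(img, scale):
    """Bilinear: every output pixel is a distance-weighted average of a 2x2 neighborhood."""
    h, w = len(img), len(img[0])

    def source_coord(i, size):
        # Map the output pixel center back into input coordinates, clamped to the image.
        return min(max((i + 0.5) / scale - 0.5, 0.0), size - 1)

    out = []
    for y in range(h * scale):
        sy = source_coord(y, h)
        y0 = int(sy)
        y1 = min(y0 + 1, h - 1)
        fy = sy - y0
        row = []
        for x in range(w * scale):
            sx = source_coord(x, w)
            x0 = int(sx)
            x1 = min(x0 + 1, w - 1)
            fx = sx - x0
            # Blend horizontally on the two rows, then blend those results vertically.
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out
```

Because every bilinear output is a weighted average of existing pixels, it always falls between the darkest and brightest of its neighbors. That is exactly why interpolation can smooth a patch of grass but never invent new blades.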

The bicubic method expands on bilinear interpolation by using information from the 16 surrounding pixels (a 4×4 grid) and fitting cubic curves through them to generate new values.

It produces smoother, slightly sharper results than bilinear, yet it still breaks down when an image is enlarged too much.
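Bicubic interpolation can be sketched the same way. The version below assumes one common choice, the cubic convolution kernel with a = -0.5; real editors may use different kernels or parameters, and the helper names are hypothetical. It interpolates four rows horizontally and then blends those four results vertically, which is how the 4×4 grid of 16 pixels comes into play.

```python
import math


def cubic_weight(t, a=-0.5):
    """Cubic convolution kernel: weight of a sample at distance t from the target point."""
    t = abs(t)
    if t <= 1:
        return (a + 2) * t**3 - (a + 3) * t**2 + 1
    if t < 2:
        return a * t**3 - 5 * a * t**2 + 8 * a * t - 4 * a
    return 0.0


def cubic_interp_1d(samples, x):
    """Interpolate at fractional position x from the 4 nearest samples."""
    i = math.floor(x)
    total = 0.0
    for k in range(i - 1, i + 3):
        kk = min(max(k, 0), len(samples) - 1)  # repeat edge samples at the borders
        total += samples[kk] * cubic_weight(x - k)
    return total


def bicubic_at(img, y, x):
    """Value at fractional (y, x): 4 horizontal passes, then 1 vertical pass."""
    i = math.floor(y)
    h = len(img)
    column = [cubic_interp_1d(img[min(max(k, 0), h - 1)], x) for k in range(i - 1, i + 3)]
    # column[0] corresponds to row i - 1, so shift y into the column's coordinates.
    return cubic_interp_1d(column, y - (i - 1))
```

The kernel has small negative lobes, so near a hard edge the result can overshoot the original values: for a step from 0 to 100, the sketch returns 106.25 halfway between two bright samples next to the edge. That overshoot is part of why bicubic looks a little crisper than bilinear, and also why it can leave faint halos around high-contrast edges.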

The limitation with these traditional methods is that they can only generate information based on existing pixels.

For example, when you enlarge a photo of grass, interpolation can only stretch the green tones and blend them together, resulting in a soft patch of color with no real texture.

However, AI upscaling tackles it differently. Instead of mathematical interpolation that stretches pixels, it relies on trained models that have learned to recognize patterns.

Taking the above example of enlarging a patch of grass, AI upscaling would predict the structure of the grass blades and rebuild them in a way that looks natural.

The same applies to text, which is redrawn with crisp, clean edges, and to portraits, where features such as eyelashes or skin texture are regenerated with AI.

The Technology Behind AI Upscaling

At the core of AI upscaling are machine learning models trained on millions of images.

Many of these models are trained on pairs of images: a high-resolution original and a low-resolution copy, often made by downscaling the original. By comparing the two, they learn how fine details should look when enlarged.
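Here is a minimal sketch of how such a pair can be built, assuming a simple box-filter downscale (averaging each block of pixels) and optional Gaussian noise. The function names are hypothetical; real training pipelines typically apply more varied degradations, such as blur, noise and compression artifacts, so the model learns to cope with messy real-world inputs.

```python
import random


def downscale_box(img, factor):
    """Average each factor x factor block of a grayscale image into one pixel."""
    h, w = len(img) // factor, len(img[0]) // factor
    return [[sum(img[y * factor + dy][x * factor + dx]
                 for dy in range(factor) for dx in range(factor)) / factor**2
             for x in range(w)]
            for y in range(h)]


def make_training_pair(hr_img, factor=2, noise_std=0.0, seed=None):
    """Return (low-res input, high-res target) for one training example."""
    rng = random.Random(seed)
    lr_img = downscale_box(hr_img, factor)
    if noise_std > 0:
        # Optional degradation so the model also learns to handle sensor noise.
        lr_img = [[v + rng.gauss(0.0, noise_std) for v in row] for row in lr_img]
    return lr_img, hr_img
```

During training, the model sees the low-resolution input, produces its own enlargement, and is adjusted according to how far that guess lands from the high-resolution target.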

When you run your own photo through an AI upscaler, the model applies this learning to predict the missing pixels.

It does not copy from the training images. Instead, it recognizes patterns and generates new detail that fits naturally with the existing content.

This is what separates AI upscaling from traditional interpolation. Interpolation simply averages pixel values to guess what should go in between.

Deep learning models can identify that a blurry patch might be hair, bricks, or grass, and then reconstruct pixels that look like realistic hair strands, brick edges, or blades of grass.

The difference in results is striking. Interpolation stretches the image, whereas AI infers and reconstructs plausible detail.

Types of AI Upscaling Models

AI upscaling is not a single method. Several model types power the tools we use today, each with its own strengths. Below are some of the model types used in AI upscaling.

Convolutional Neural Networks (CNNs)

CNNs are the foundation of many image-related AI systems. They slide small learned filters across the image, so each output pixel is computed from a local patch of input pixels, and through training they learn how to rebuild detail in each patch.

CNNs are efficient and good at enhancing edges, sharpening details, and improving overall clarity.

Many of the first consumer upscalers were based on CNNs because they’re stable and relatively fast.
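The core operation a CNN layer repeats is a small filter sliding across the image. In this sketch the 3×3 sharpening kernel is hand-picked for illustration; in a real upscaler the kernel weights are learned during training, and many layers are stacked with non-linearities such as ReLU between them. As in most deep learning libraries, the "convolution" here is technically a cross-correlation (the kernel is not flipped), and the function names are hypothetical.

```python
def conv2d(img, kernel):
    """'Valid' 2D cross-correlation: the per-layer operation in a CNN (before bias/activation)."""
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(img), len(img[0])
    return [[sum(kernel[i][j] * img[y + i][x + j] for i in range(kh) for j in range(kw))
             for x in range(w - kw + 1)]
            for y in range(h - kh + 1)]


def relu(feature_map):
    """Non-linearity applied between layers: negative responses are set to zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Hand-picked example filter; a trained CNN would learn its own weights.
SHARPEN = [[0, -1, 0],
           [-1, 5, -1],
           [0, -1, 0]]
```

On a flat region the sharpening kernel leaves values unchanged (its weights sum to 1), while around an isolated bright pixel it amplifies the contrast. That kind of local edge enhancement is what early CNN upscalers were good at.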

Generative Adversarial Networks (GANs)

GANs use two networks that work against each other. One generates a higher-resolution image while the other evaluates how real it looks compared to authentic high-resolution examples.

Through this contest, the generator learns to create increasingly lifelike detail.

GANs often produce images with richer texture and more natural realism than CNNs trained purely to minimize pixel-by-pixel error (the generator inside a GAN is usually a CNN itself), which makes them well-suited for photography and video.
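The contest between the generator and the discriminator is usually expressed as a pair of loss functions. This sketch shows the standard binary cross-entropy form for a single image, treating the discriminator's output as the probability that an image is real. The helper names are hypothetical, and super-resolution GANs usually add this adversarial term to a pixel- or feature-based content loss rather than using it on its own.

```python
import math

EPS = 1e-12  # avoids log(0) when the discriminator is fully confident


def discriminator_loss(d_real, d_fake):
    """D wants d_real -> 1 for genuine high-res images and d_fake -> 0 for generated ones."""
    return -(math.log(d_real + EPS) + math.log(1.0 - d_fake + EPS))


def generator_loss(d_fake):
    """Non-saturating generator loss: G is rewarded when D rates its output as real."""
    return -math.log(d_fake + EPS)
```

As the generator's output becomes more convincing (d_fake rises), its own loss falls while the discriminator's loss rises, pushing both networks to keep improving.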

Transformers and Diffusion Models

Transformers, which reshaped natural language processing, can capture long-range relationships across an entire image.

This makes them strong at preserving consistency across complex scenes.
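The mechanism that lets transformers relate distant parts of an image is self-attention. In this simplified sketch, each token stands for the feature vector of one image patch, and every token computes a weighted average over all the others, however far apart they are. Real models learn separate query, key and value projections and use multiple attention heads; those are omitted here, and some upscaling transformers limit attention to local windows to keep the cost manageable.

```python
import math


def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Single-head scaled dot-product self-attention.

    Simplification: tokens are used directly as queries, keys and values
    (identity projections); real transformers learn these projections.
    """
    d = len(tokens[0])
    outputs, all_weights = [], []
    for query in tokens:
        # Score this patch against every patch in the image, near or far.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in tokens]
        weights = softmax(scores)
        all_weights.append(weights)
        # Output = attention-weighted average of all value vectors.
        outputs.append([sum(w * tok[c] for w, tok in zip(weights, tokens)) for c in range(d)])
    return outputs, all_weights
```

With tokens `[[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]`, the first token attends to the similar third token as strongly as to itself, which is the kind of long-range matching that helps keep repeated structures consistent across a scene.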

Diffusion models, best known for powering AI image generation, start from random noise and remove it step by step, guided by the low-resolution input, until a sharp high-resolution image emerges. Both approaches show promise for the next generation of upscaling.
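The step-by-step process can be sketched with the standard DDPM formulation: noise is added along a fixed schedule, and each reverse step removes the noise that a trained network predicts. The linear schedule values are a common default rather than something specific to any particular upscaler, and `predicted_noise` stands in for the output of a hypothetical model that, in an upscaler, would also receive the low-resolution image as input.

```python
import math
import random


def linear_beta_schedule(steps, beta_start=1e-4, beta_end=0.02):
    """Noise variance added at each step (requires steps >= 2)."""
    return [beta_start + (beta_end - beta_start) * t / (steps - 1) for t in range(steps)]


def cumulative_alphas(betas):
    """alpha_bar_t: the share of the original signal variance that survives to step t."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out


def add_noise(x0, alpha_bar, rng):
    """Forward process: jump straight to step t by mixing the clean pixels with Gaussian noise."""
    return [math.sqrt(alpha_bar) * v + math.sqrt(1.0 - alpha_bar) * rng.gauss(0.0, 1.0)
            for v in x0]


def reverse_step(x_t, t, betas, alpha_bars, predicted_noise, rng):
    """One DDPM denoising step, given a (hypothetical) network's estimate of the noise in x_t."""
    beta = betas[t]
    mean = [(v - beta / math.sqrt(1.0 - alpha_bars[t]) * e) / math.sqrt(1.0 - beta)
            for v, e in zip(x_t, predicted_noise)]
    if t == 0:
        return mean  # final step: no fresh noise is added
    return [m + math.sqrt(beta) * rng.gauss(0.0, 1.0) for m in mean]
```

Running `reverse_step` from the last step down to step 0 is what "reconstructing step by step" means in practice; the quality of the result depends entirely on how well the network predicts the noise at each step.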

AI Upscaling Use Case Scenarios

Different people find value in AI upscaling for different reasons. Here are a few common scenarios.

- Restoring old photos: Family albums and scanned prints often look small on today’s screens. Upscaling makes them clearer and suitable for sharing online or printing.

- Making large prints: A photo from a crop-sensor camera or even a smartphone can be enlarged enough to print at poster size or bigger.

- Cropping flexibility: Wildlife or sports photographers can crop tighter into the action and still keep enough resolution for professional use.

- Video restoration: Old camcorder clips or HD footage can be upscaled to 4K or 8K, making them suitable for modern displays.

- Creative projects: Designers use upscaling to prepare assets for billboards, games, or digital art where resolution is critical.
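The numbers behind a large print or a video upscale are simple arithmetic. This sketch assumes the commonly used 300 ppi print target (large posters viewed from a distance often get away with less) and that source and target share the same orientation; the function names are hypothetical.

```python
import math


def pixels_for_print(width_in, height_in, ppi=300):
    """Pixel dimensions needed to print at the given size in inches and pixels per inch."""
    return math.ceil(width_in * ppi), math.ceil(height_in * ppi)


def required_scale(src_w, src_h, target_w, target_h):
    """Smallest uniform enlargement that covers the target in both dimensions."""
    return max(target_w / src_w, target_h / src_h)
```

A 12 MP smartphone photo (4000×3000) printed at 36×24 inches and 300 ppi needs roughly a 2.7× enlargement, while 1080p video needs 2× to reach 4K (3840×2160) and 4× to reach 8K (7680×4320).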

Benefits of AI Upscaling

AI upscaling offers several clear advantages over older methods. Below are some of the most prominent benefits of using AI for upscaling.

Higher-quality enlargements

The most obvious benefit is sharper and clearer images. AI can turn a small file into something that looks good on large screens or in print, without the soft or blocky look of interpolation.

Preserves texture and sharpness

AI models are trained on real-world textures such as skin, fabric, hair, or foliage. Enlarged images therefore retain these qualities in a way that feels natural.

In practice, this means portraits look lifelike, landscapes maintain depth, and product shots hold up even under close inspection.

Handles difficult images better

Low-light photos, compressed JPEGs, and older digital images often fall apart when enlarged with traditional methods.

AI does a better job of cleaning up noise and enhancing edges, making these files more usable.

Flexibility for different needs

Photographers can crop tighter without losing quality. Designers can repurpose older assets for new projects. Casual users can make old snapshots look decent on modern displays.

Limitations & Challenges

Despite its strengths, AI upscaling comes with some trade-offs. Let’s discuss some of the most common issues.

Hallucinated details

Because AI predicts pixels, it sometimes invents detail that was never there. A blurry sign might become legible, but not necessarily accurate.

This is harmless in personal use but a concern in fields where authenticity matters, such as journalism or forensics.

Processing power

Running these models requires strong hardware or cloud services. On a laptop without a dedicated GPU, a single large image can take several minutes.

Upscaling video is even more demanding. Cloud tools remove some of the burden but raise concerns about privacy and internet speed.
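To see why video is so much more demanding, multiply the per-image cost by the number of frames. The `seconds_per_frame` value is a hypothetical input, since real timings depend heavily on hardware, model and resolution.

```python
def estimate_upscale_hours(duration_minutes, fps, seconds_per_frame):
    """Rough total time to upscale a video frame by frame.

    seconds_per_frame is a hypothetical figure: measure it on your own hardware.
    """
    frames = duration_minutes * 60 * fps
    return frames * seconds_per_frame / 3600
```

At an assumed 2 seconds per frame, a 10-minute clip at 30 fps (18,000 frames) would take about 10 hours.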

Ethical boundaries

The line between restoration and manipulation can blur. A family portrait restored for print is one thing, but a historical or legal photo altered by AI is another.

Deciding when enhancement crosses into fabrication is a challenge that will only grow as the technology improves.

The Future of AI Upscaling

The technology is advancing quickly and is likely to move deeper into everyday devices and services.

Built into capture devices

Smartphones and cameras are starting to include AI features for sharpening and noise reduction. Upscaling could be the next step, allowing images and video to be enhanced in real time at the moment of capture.

Real-time video upscaling

Real-time upscaling is already common in gaming (NVIDIA’s DLSS is a well-known example), and streaming platforms are experimenting with it as well.

Movies shot or stored at lower resolution could be played back at 4K without the storage or bandwidth cost of native 4K files. Games can render at a lower resolution while AI fills in the detail on the fly, saving processing power.
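The savings come from pixel counts. This sketch compares how many pixels a game must render at its internal resolution versus the output resolution; it is a rough proxy that ignores the cost of running the upscaler itself, so real-world gains are smaller. The names are hypothetical.

```python
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixel_ratio(render_res, output_res):
    """How many times more pixels the output has than the internal render resolution."""
    rw, rh = RESOLUTIONS[render_res]
    ow, oh = RESOLUTIONS[output_res]
    return (ow * oh) / (rw * rh)
```

Rendering at 1080p and upscaling to 4K means shading only a quarter of the output pixels, and 4K has 2.25 times as many pixels as 1440p.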

Next-generation models

Transformer-based and diffusion-based models are likely to drive the next wave of improvements. They promise better consistency across images and more control over accuracy, which could reduce the risk of unnatural artifacts.

Best Practices for AI Upscaling

If you want to get the most from AI upscaling, a few practical steps make a big difference.

- Start with the best file available: Use raw files if possible. Even a slightly higher-quality input improves the final result.

- Only enlarge as much as you need: Oversizing can make details look unnatural. Pick a scale that matches your output.

- Match the model to the image: Portraits respond better to face-focused models, while graphics or scanned text need models tuned for sharp edges.

- Preview carefully: Look at hair, skin, or grass before exporting. If they look plasticky, adjust the settings or try another model.

- Watch your storage: Enhanced DNGs and TIFFs can be massive. Be mindful when batch processing.

- Combine with other edits: Upscaling works best alongside noise reduction and sharpening.

- Keep expectations realistic: AI can enhance, but it cannot recover details that were never captured. Anything it adds is an educated guess.
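A quick way to estimate storage before batch processing is to multiply out the pixel dimensions. This sketch computes the uncompressed size of an RGB image, assuming 16 bits per channel; the function name is hypothetical, and actual TIFFs and DNGs may be smaller if compression is enabled.

```python
def uncompressed_size_mb(width, height, scale=1, channels=3, bits_per_channel=16):
    """Approximate uncompressed size in megabytes (10**6 bytes) after upscaling."""
    out_w, out_h = width * scale, height * scale
    bytes_per_pixel = channels * bits_per_channel // 8
    return out_w * out_h * bytes_per_pixel / 1_000_000
```

A 24 MP (6000×4000) file comes to about 144 MB uncompressed; upscaling it 2× quadruples that to 576 MB, and 4× pushes it past 2.3 GB.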

Final Words

AI upscaling has transformed how we approach images and video. What once looked too small or too rough can now be made sharp enough for modern screens and even large prints.

It’s not flawless, and it should be used with care, but it is one of the most practical ways AI is helping creative work today.

As the technology matures and integrates into cameras, phones, and streaming platforms, it will likely become something we use without even thinking about it.

For now, knowing how it works and what it can do helps you make the most of a tool that bridges the gap between the past and the present.

Credit: Source Post